Projects

i) Deep Learning for Healthcare: Published Research Replication Project

Description: In this project, another student and I replicated the paper "Readmission prediction via deep contextual embedding of clinical concepts."

Problem: Hospital readmission following admission for congestive heart failure is harmful to patients and costly.

Solution: The paper aims to predict which patients are at risk of readmission so that more intensive readmission prevention interventions can be targeted to them. This is achieved by applying the proposed deep learning (neural network) architecture to electronic health record clinical notes related to congestive heart failure. The model combines a recurrent neural network (RNN) with a latent topic model. The two complement each other: RNNs are good at capturing the local structure of a word sequence but may have difficulty "remembering" long-range interactions, whereas latent topic models ignore word order but capture the general structure of a document.

Result: Applying the CONTENT model to synthetic data, we achieved performance metrics within the ranges the authors published.

ii) Heart Failure Prediction Enhancement Using Advanced Autoencoder Architectures

Description: This project improved heart failure prediction accuracy by implementing advanced autoencoder architectures. The focus was on using PyTorch to build a suite of autoencoder models, including vanilla, sparse, denoising, and stacked variants.

Problem: The challenge addressed in this research is enhancing the accuracy of predicting heart failure, necessitating the exploration of advanced autoencoder architectures.

Solution: Torchvision was used for data preprocessing, and the resulting models surpassed the accuracy benchmarks in hidden tests. Variational autoencoders were used to generate synthetic datasets, and the autoencoder architectures were developed and tuned for efficient data compression and feature extraction.

Result: The autoencoder models, built in PyTorch, surpassed the performance benchmarks and improved predictive accuracy for heart failure.

iii) Heart Failure Prediction with RETAIN: An Interpretable RNN Model

Description: Developed an interpretable predictive model for heart failure using RETAIN, an advanced RNN with a reverse time attention mechanism, achieving high accuracy in predictions using the MIMIC-III synthesized dataset.

Problem: The challenge addressed in this research is enhancing the accuracy of predicting heart failure, necessitating the exploration of an interpretable RNN model with the RETAIN architecture.

Solution: Implemented dual attention mechanisms, one over patient visits and one over the features within each visit, to improve both model interpretability and predictive precision. Applied embedding techniques to healthcare data, transforming diagnosis codes into meaningful representations for sequential analysis. Generated context vectors by weighted averaging of encoder hidden states.
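The weighted-averaging step can be illustrated with a small sketch. This is not the project's code: the function names are hypothetical, and the attention scores are passed in directly rather than produced by learned layers, an assumption made to keep the example self-contained.

```python
import math


def softmax(scores):
    """Turn raw attention scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_vector(hidden_states, scores):
    """Weighted average of hidden states, one state per visit.

    hidden_states: list of equal-length lists (one vector per visit).
    scores: one raw attention score per visit (assumed given here;
            in a trained model they would come from an attention layer).
    """
    weights = softmax(scores)
    dim = len(hidden_states[0])
    return [
        sum(w * h[d] for w, h in zip(weights, hidden_states))
        for d in range(dim)
    ]


# Three visits with two-dimensional hidden states (made-up numbers).
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
uniform = context_vector(states, [0.0, 0.0, 0.0])   # plain mean of the states
focused = context_vector(states, [0.0, 0.0, 50.0])  # dominated by the last visit
```

With equal scores the context vector is just the mean of the hidden states; a large score on one visit pulls the context vector toward that visit, which is what makes the weights readable as an explanation.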

Result: Trained an attention-based RNN to predict heart failure cases, demonstrating the model's efficacy in handling complex medical datasets and contributing to the advancement of interpretable machine learning in healthcare.

iv) Advanced ECG Classification with MINA: Multilevel Attention-Guided CNN+RNN Model

Description: Implemented MINA, an advanced CNN+RNN model, for binary classification of ECG signals, distinguishing atrial fibrillation from normal sinus rhythms with high accuracy.

Problem: The challenge addressed in this project is the accurate classification of ECG signals into atrial fibrillation and normal sinus rhythms, demanding the development of an advanced CNN+RNN model.

Solution: Developed a Knowledge-guided Attention Module that integrates three distinct attention mechanisms (Beat Level, Rhythm Level, and Frequency Level), so that beat, rhythm, and frequency features all contribute to signal interpretation.

Result: Achieved significant accuracy in binary classification of ECGs through model training and evaluation, proving the efficacy of MINA in detecting atrial fibrillation.

Walmart Sales Forecasting

Description: Sales or revenue forecasting is a critical approach to planning for the future of retail operations effectively and efficiently. Walmart, a leading retailer in the USA, wants to forecast sales for their product categories in their stores based on the sales history of each category. This is based on the Kaggle Walmart store sales forecasting competition.

Problem: Forecast weekly Walmart sales by store and department.

Solution: Pre-process and apply PCR (Principal Components Regression).

Pre-processing: For each of the 10 folds, we extract from the training dataset the set of weeks that corresponds to the weeks that must be predicted, and use it to train a model specific to that fold. That is, if we are predicting weeks 7 through 14 of 2021, we train on a data set containing weeks 7 through 14 of 2020. Then, we keep only the store and department pairs that appear in both the training and testing datasets we created. This results in 10 training and 10 testing datasets, each of which has a unique range of dates and store-department combinations.
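A minimal sketch of this fold construction follows. The `Row` record and the `build_fold` function are hypothetical names introduced for illustration, and the sketch assumes each record already carries a year and a week-of-year number; the project's actual data handling may differ.

```python
from collections import namedtuple

# Hypothetical record layout; sales is None for rows to be predicted.
Row = namedtuple("Row", "store dept year week sales")


def build_fold(train_rows, test_rows):
    """Return (train, test) restricted to matching weeks and shared pairs."""
    # Weeks to predict, shifted back one year (2021 week 7 -> 2020 week 7).
    target_weeks = {(r.year - 1, r.week) for r in test_rows}
    train = [r for r in train_rows if (r.year, r.week) in target_weeks]

    # Keep only store-department pairs present on both sides.
    shared = {(r.store, r.dept) for r in train} & {
        (r.store, r.dept) for r in test_rows
    }
    train = [r for r in train if (r.store, r.dept) in shared]
    test = [r for r in test_rows if (r.store, r.dept) in shared]
    return train, test


history = [
    Row(1, 1, 2020, 7, 100.0),
    Row(1, 1, 2020, 8, 110.0),
    Row(1, 1, 2020, 20, 90.0),  # outside the target weeks
    Row(2, 1, 2020, 7, 50.0),
    Row(1, 2, 2020, 7, 30.0),   # pair missing from the test set
]
to_predict = [
    Row(1, 1, 2021, 7, None),
    Row(1, 1, 2021, 8, None),
    Row(2, 1, 2021, 7, None),
    Row(3, 1, 2021, 7, None),   # pair missing from the training set
]
fold_train, fold_test = build_fold(history, to_predict)
```

Repeating this for each fold's test weeks would yield the 10 paired datasets described above.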

PCR (Principal Components Regression): First, we centered the data by subtracting each week's mean sales from each store's sales for that week. Then we performed SVD (singular value decomposition) on the centered training data and reconstructed the centered data matrix using only the eight largest singular values. Finally, we added each week's mean sales back to each store's sales for that week, producing the data matrix used to forecast.
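The centering, truncated SVD, and mean restoration steps can be sketched with NumPy as follows. The function name `smooth_with_svd` and the matrix layout (rows are stores, columns are weeks) are assumptions made for this illustration, not a copy of the project's code.

```python
import numpy as np


def smooth_with_svd(sales, n_components=8):
    """Low-rank reconstruction of a stores-by-weeks sales matrix.

    sales: 2-D array, assumed shape (n_stores, n_weeks).
    n_components: number of largest singular values to keep.
    """
    sales = np.asarray(sales, dtype=float)

    # Center: subtract each week's mean (column mean) from that week's sales.
    week_means = sales.mean(axis=0)
    centered = sales - week_means

    # Thin SVD; singular values come back sorted from largest to smallest.
    u, s, vt = np.linalg.svd(centered, full_matrices=False)

    # Rebuild the centered matrix from the leading components only.
    k = min(n_components, len(s))
    low_rank = (u[:, :k] * s[:k]) @ vt[:k]

    # Add the weekly means back.
    return low_rank + week_means


# Example on random data standing in for one department's sales.
rng = np.random.default_rng(0)
demo = rng.normal(loc=1000.0, scale=50.0, size=(20, 12))
smoothed = smooth_with_svd(demo, n_components=8)
```

Keeping only the leading components discards the directions with the least variance, which is the intended denoising effect; the choice of eight comes from the description above.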

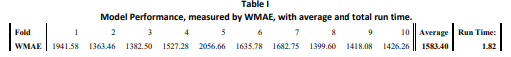

Result: Table of Weighted Mean Absolute Error (WMAE) values for each fold and their average.
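For reference, a weighted mean absolute error can be computed as in the sketch below. It assumes the Kaggle competition's weighting, in which holiday weeks count five times as much as other weeks; the function name and the sample numbers are illustrative only and are not results from this project.

```python
def wmae(actual, predicted, is_holiday, holiday_weight=5.0):
    """Weighted mean absolute error over paired observations.

    is_holiday: one boolean per observation; holiday weeks get
    holiday_weight, all other weeks get weight 1 (assumed weighting).
    """
    weights = [holiday_weight if h else 1.0 for h in is_holiday]
    weighted_errors = sum(
        w * abs(a - p) for w, a, p in zip(weights, actual, predicted)
    )
    return weighted_errors / sum(weights)


# Made-up numbers: errors of 10, 10, and 0, with the second week a holiday.
score = wmae(
    actual=[100.0, 200.0, 300.0],
    predicted=[110.0, 190.0, 300.0],
    is_holiday=[False, True, False],
)
```

Because holiday weeks carry more weight, an error in a holiday week moves the score more than the same error in an ordinary week.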